Day 4 - Google Search grounding with the Gemini API

Generative AI Agents

Copyright 2025 Google LLC.

# @title Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# https://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.


Welcome back to the Kaggle 5-day Generative AI course!

In this optional notebook, you will use Google Search results with the Gemini API in a technique called grounding, where the model is connected to verifiable sources of information. Using search grounding is similar to using the RAG system you implemented earlier in the week, but the Gemini API automates a lot of it for you. The model generates Google Search queries and invokes the searches automatically, retrieving relevant data from Google’s index of the web and providing links to search suggestions that support the query, so your users can verify the sources.

New in Gemini 2.0

Gemini 2.0 Flash provides a generous Google Search quota as part of the free tier. If you switch models back to 1.5, you will need to enable billing to use Grounding with Google Search, or you can try it out in AI Studio. See the earlier versions of this notebook for guidance.

Optional: Use Google AI Studio

If you wish to try out grounding with Google Search, follow this section to try it out using the AI Studio interface. Or skip ahead to the API section to try the feature here in your notebook.

Open AI Studio

Start by going to AI Studio. You should be in the “New chat” interface.

Search Grounding is best with gemini-2.0-flash, but try out gemini-1.5-flash too.

Ask a question

Now enter a prompt into the chat interface. Try asking something that is timely and might require recent information to answer, like a recent sport score. For this query, grounding will be disabled by default.

This screenshot shows the response for the prompt "What were the top halloween costumes this year?". Every execution will be different, but the model typically talks about 2023 and hedges, saying it doesn’t have access to specific information. The result is a general comment rather than specific answers.

Enable grounding

On the right-hand sidebar, under the Tools section, find and enable the Grounding option.

Now re-run your question by hovering over the user prompt in the chat history, and pressing the Gemini ✨ icon to re-run your prompt.

You should now see a response generated that references sources from Google Search.

Try your own queries

Explore this interface and try some other queries. Share what works well in the Discord! You can start from this blank template that has search grounding enabled.

The remaining steps require an API key with billing enabled. They are not required to complete this course; if you have tried grounding in AI Studio you are done for this notebook.

Use the API

Start by installing and importing the Gemini API Python SDK.

# Uninstall packages from Kaggle base image that are not needed.

!pip uninstall -qy jupyterlab jupyterlab-lsp

# Install the google-genai SDK for this codelab.

!pip install -qU 'google-genai==1.7.0'

WARNING: Skipping jupyterlab as it is not installed.

WARNING: Skipping jupyterlab-lsp as it is not installed.

from google import genai

from google.genai import types

from IPython.display import Markdown, HTML, display

genai.__version__

'1.7.0'

Set up your API key

To run the following cell, your API key must be stored in a Kaggle secret named GOOGLE_API_KEY.

If you don’t already have an API key, you can grab one from AI Studio. You can find detailed instructions in the docs.

To make the key available through Kaggle secrets, choose Secrets from the Add-ons menu and follow the instructions to add your key or enable it for this notebook.

from kaggle_secrets import UserSecretsClient

GOOGLE_API_KEY = UserSecretsClient().get_secret("GOOGLE_API_KEY")

client = genai.Client(api_key=GOOGLE_API_KEY)

If you received an error response along the lines of No user secrets exist for kernel id ..., then you need to add your API key via Add-ons, Secrets and enable it.

Automated retry

# Define a retry policy. The model might make multiple consecutive calls automatically
# for a complex query; this ensures the client retries if it hits quota limits.
from google.api_core import retry

is_retriable = lambda e: (isinstance(e, genai.errors.APIError) and e.code in {429, 503})

# Wrap generate_content only once, even if this cell is re-run.
if not hasattr(genai.models.Models.generate_content, '__wrapped__'):
    genai.models.Models.generate_content = retry.Retry(
        predicate=is_retriable)(genai.models.Models.generate_content)
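To make the wrapping step more concrete, here is a minimal stand-alone sketch of what a predicate-based retry wrapper does. It is not the google.api_core implementation: `retry_on`, `FakeAPIError` and `flaky_call` are hypothetical names, and the attempt count and backoff values are arbitrary assumptions chosen for illustration.

```python
import functools
import time


class FakeAPIError(Exception):
    """Hypothetical stand-in for an API error that carries an HTTP-style code."""

    def __init__(self, code):
        super().__init__(f"API error {code}")
        self.code = code


def is_retriable(e):
    # Same idea as the predicate above: retry only on quota (429) or unavailable (503).
    return isinstance(e, FakeAPIError) and e.code in {429, 503}


def retry_on(predicate, max_attempts=5, initial_delay=0.01, multiplier=2.0):
    """Return a decorator that retries a function while predicate(exc) is true."""

    def decorator(fn):
        @functools.wraps(fn)  # sets wrapper.__wrapped__, so double-wrapping can be detected
        def wrapper(*args, **kwargs):
            delay = initial_delay
            for attempt in range(1, max_attempts + 1):
                try:
                    return fn(*args, **kwargs)
                except Exception as e:
                    # Give up on the last attempt or on a non-retriable error.
                    if attempt == max_attempts or not predicate(e):
                        raise
                    time.sleep(delay)
                    delay *= multiplier  # exponential backoff between attempts

        return wrapper

    return decorator


calls = {"count": 0}


@retry_on(is_retriable)
def flaky_call():
    # Hypothetical call that fails twice with a quota error, then succeeds.
    calls["count"] += 1
    if calls["count"] < 3:
        raise FakeAPIError(429)
    return "ok"


result = flaky_call()
print(result, calls["count"])
```

The `__wrapped__` attribute set by `functools.wraps` is the kind of marker the `hasattr` guard relies on to avoid wrapping the same method twice when a cell is re-run.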

Use search grounding

Model support

Search grounding is available in a limited set of models. Find a model that supports it on the models page.

In this guide, you’ll use gemini-2.0-flash.

Make a request

To enable search grounding, you specify it as a tool: google_search. Like other tools, this is supplied as a parameter in GenerateContentConfig, and can be passed to generate_content calls as well as chats.create (for all chat turns) or chat.send_message (for specific turns).


# Ask for information without search grounding.
response = client.models.generate_content(
    model='gemini-2.0-flash',
    contents="When and where is Billie Eilish's next concert?")

Markdown(response.text)

Unfortunately, Billie Eilish does not have any upcoming concerts announced at this time. You can check her website and social media pages for any potential future announcements.

Now try with grounding enabled.


# And now re-run the same query with search grounding enabled.
config_with_search = types.GenerateContentConfig(
    tools=[types.Tool(google_search=types.GoogleSearch())],
)

def query_with_grounding():
    response = client.models.generate_content(
        model='gemini-2.0-flash',
        contents="When and where is Billie Eilish's next concert?",
        config=config_with_search,
    )
    return response.candidates[0]

rc = query_with_grounding()
Markdown(rc.content.parts[0].text)

Billie Eilish’s next concert is on April 23, 2025, at the Avicii Arena in Stockholm, Sweden, as part of her “Hit Me Hard and Soft: The Tour”.

Response metadata

When search grounding is used, the model returns extra metadata that includes links to search suggestions, supporting documents and information on how the supporting documents were used.

Each “grounding chunk” represents information retrieved from Google Search that was used in the grounded generation request. Following the URI will take you to the source.

while not rc.grounding_metadata.grounding_supports or not rc.grounding_metadata.grounding_chunks:
    # If incomplete grounding data was returned, retry.
    rc = query_with_grounding()

chunks = rc.grounding_metadata.grounding_chunks
for chunk in chunks:
    print(f'{chunk.web.title}: {chunk.web.uri}')

songkick.com: https://vertexaisearch.cloud.google.com/grounding-api-redirect/AWQVqALBuzPgWPk46pr0FjfcdKWyPSnn0-xenoJW8wtXHisSwVmmSYqBVm0529OwE8VxBePr99Ip0lzUJLLemRmeeSA8miQjwsixqwOHfgSDwYgH_CBrVMiv4w21qCW56l4BJqPm6U0j8FJLIu13DbwUxZ6_Yg3PWUFx0S7mEA==

ticketmaster.com: https://vertexaisearch.cloud.google.com/grounding-api-redirect/AWQVqAIa_URzM0mhO89R1AWT6lK-Mu0TpXn1LY8Dv4f5IcDjYyYl3_vVDtqsjOWWrG-1bXAWIPY9NNZP9Q5xuAfomzyPu5CFCflBT6fQmfI4SAMmDTApLbva9c5iGXYPycIdLgpGNES03kV_B8iOhBZiRuL7g1PcJ0ZtuVWDWKXT
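The `while` loop above keeps re-querying until both supports and chunks are present, which could spin indefinitely if the metadata never arrives. A bounded variant might look like the sketch below. The helper names `has_complete_grounding` and `fetch_until_complete` are hypothetical, the attempt limit is an arbitrary assumption, and the fake candidates only mimic the attribute names used in this notebook.

```python
from types import SimpleNamespace


def has_complete_grounding(candidate):
    """True when the candidate carries both grounding supports and grounding chunks."""
    meta = getattr(candidate, "grounding_metadata", None)
    return bool(meta and meta.grounding_supports and meta.grounding_chunks)


def fetch_until_complete(fetch, is_complete, max_attempts=5):
    """Call fetch() until is_complete(result) holds, giving up after max_attempts."""
    for _ in range(max_attempts):
        result = fetch()
        if is_complete(result):
            return result
    raise RuntimeError(f"no complete result after {max_attempts} attempts")


# Hypothetical stand-ins for three successive responses: no metadata,
# metadata without supports, then complete metadata.
fake_candidates = iter([
    SimpleNamespace(grounding_metadata=None),
    SimpleNamespace(grounding_metadata=SimpleNamespace(
        grounding_supports=None, grounding_chunks=["chunk"])),
    SimpleNamespace(grounding_metadata=SimpleNamespace(
        grounding_supports=["support"], grounding_chunks=["chunk"])),
])

candidate = fetch_until_complete(lambda: next(fake_candidates), has_complete_grounding)
print(candidate.grounding_metadata.grounding_supports)
```

In the notebook, the equivalent call would presumably be `fetch_until_complete(query_with_grounding, has_complete_grounding)`. The check also tolerates a candidate whose `grounding_metadata` is missing altogether.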

As part of the response, there is a standalone styled HTML content block that you use to link back to relevant search suggestions related to the generation.

HTML(rc.grounding_metadata.search_entry_point.rendered_content)

The grounding_supports in the metadata provide a way for you to correlate the grounding chunks used to the generated output text.

from pprint import pprint

supports = rc.grounding_metadata.grounding_supports
for support in supports:
    pprint(support.to_json_dict())

{'confidence_scores': [0.77666044, 0.86427045],
 'grounding_chunk_indices': [0, 1],
 'segment': {'end_index': 141,
             'text': "Billie Eilish's next concert is on April 23, 2025, at "
                     'the Avicii Arena in Stockholm, Sweden, as part of her '
                     '"Hit Me Hard and Soft: The Tour".'}}

These supports can be used to highlight text in the response, or build tables of footnotes.

import io

markdown_buffer = io.StringIO()

# Print the text with footnote markers.
markdown_buffer.write("Supported text:\n\n")
for support in supports:
    markdown_buffer.write(" * ")
    markdown_buffer.write(
        rc.content.parts[0].text[support.segment.start_index : support.segment.end_index]
    )

    for i in support.grounding_chunk_indices:
        chunk = chunks[i].web
        markdown_buffer.write(f"<sup>[{i+1}]</sup>")

    markdown_buffer.write("\n\n")

# And print the footnotes.
markdown_buffer.write("Citations:\n\n")
for i, chunk in enumerate(chunks, start=1):
    markdown_buffer.write(f"{i}. [{chunk.web.title}]({chunk.web.uri})\n")

Markdown(markdown_buffer.getvalue())

Supported text:

- Billie Eilish’s next concert is on April 23, 2025, at the Avicii Arena in Stockholm, Sweden, as part of her “Hit Me Hard and Soft: The Tour”.[1][2]

Citations:
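Besides a footnote table, the supports can also drive inline citation markers placed directly in the response text. The following is a minimal sketch on plain dicts shaped like the `to_json_dict()` output shown earlier. The function name `add_inline_citations` and the sample text are hypothetical. It also assumes the segment indices are byte offsets into the UTF-8 text, as the API reference appears to describe them; if your SDK version reports character offsets instead, the encode and decode steps should be dropped.

```python
def add_inline_citations(text, supports):
    """Insert [n] markers after each supported segment.

    `supports` is a list of dicts with the keys used in this notebook:
    {'segment': {'end_index': int}, 'grounding_chunk_indices': [int, ...]}.
    Indices are treated as UTF-8 byte offsets (an assumption, see lead-in).
    """
    data = text.encode("utf-8")
    # Work from the end of the text so earlier offsets stay valid after each insertion.
    ordered = sorted(supports, key=lambda s: s["segment"]["end_index"], reverse=True)
    for support in ordered:
        end = support["segment"]["end_index"]
        markers = "".join(f"[{i + 1}]" for i in support["grounding_chunk_indices"])
        data = data[:end] + markers.encode("utf-8") + data[end:]
    return data.decode("utf-8")


# Hypothetical sample: the accented character takes two bytes, so byte and
# character offsets differ from the first sentence onwards.
sample_text = "Café opens at 9. It closes at 5."
sample_supports = [
    {"segment": {"end_index": 17}, "grounding_chunk_indices": [0]},
    {"segment": {"start_index": 18, "end_index": 33}, "grounding_chunk_indices": [0, 1]},
]

cited = add_inline_citations(sample_text, sample_supports)
print(cited)
```

Processing the supports from last to first is a deliberate choice: inserting a marker shifts everything after it, so working backwards avoids having to track a running offset.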

Search with tools

In this example, you’ll enable the Google Search grounding tool and the code generation tool across two steps. In the first step, the model uses Google Search to find the requested information; in the follow-up question, it generates code to plot the results.

This usage includes textual, visual and code parts, so first define a function to help visualise these.

from IPython.display import display, Image, Markdown

def show_response(response):
    for p in response.candidates[0].content.parts:
        if p.text:
            display(Markdown(p.text))
        elif p.inline_data:
            display(Image(p.inline_data.data))
        else:
            print(p.to_json_dict())

        display(Markdown('----'))

Now start a chat asking for some information. Here you provide the Google Search tool so that the model can look up data from Google’s Search index.

config_with_search = types.GenerateContentConfig(

tools=[types.Tool(google_search=types.GoogleSearch())],

temperature=0.0,

)

chat = client.chats.create(model='gemini-2.0-flash')

response = chat.send_message(

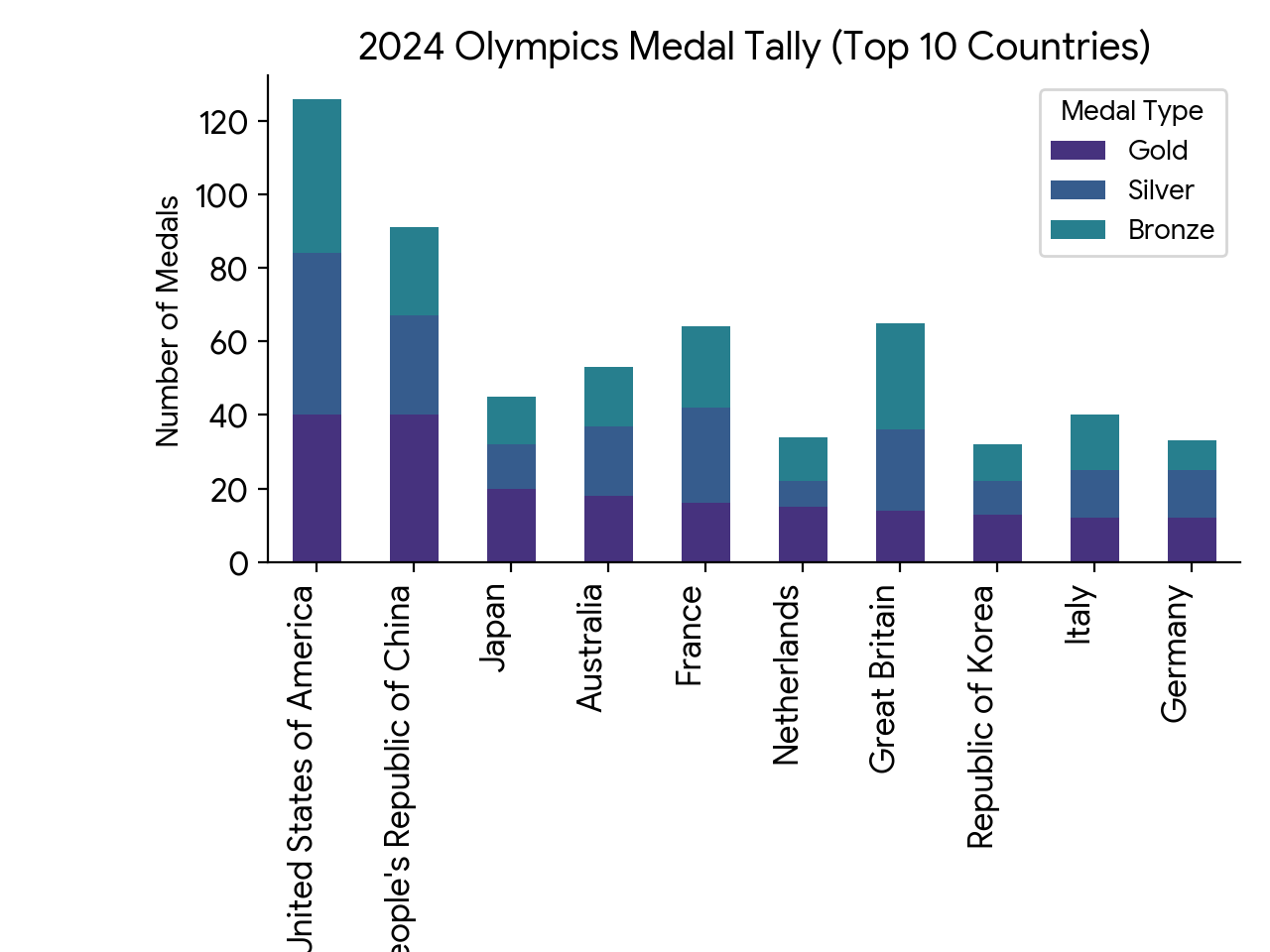

message="What were the medal tallies, by top-10 countries, for the 2024 olympics?",

config=config_with_search,

)

show_response(response)

Here are the top 10 countries by medal tally at the 2024 Paris Olympics:

- United States of America: 40 Gold, 44 Silver, 42 Bronze, Total 126

- People’s Republic of China: 40 Gold, 27 Silver, 24 Bronze, Total 91

- Japan: 20 Gold, 12 Silver, 13 Bronze, Total 45

- Australia: 18 Gold, 19 Silver, 16 Bronze, Total 53

- France: 16 Gold, 26 Silver, 22 Bronze, Total 64

- Netherlands: 15 Gold, 7 Silver, 12 Bronze, Total 34

- Great Britain: 14 Gold, 22 Silver, 29 Bronze, Total 65

- Republic of Korea: 13 Gold, 9 Silver, 10 Bronze, Total 32

- Italy: 12 Gold, 13 Silver, 15 Bronze, Total 40

- Germany: 12 Gold, 13 Silver, 8 Bronze, Total 33

Continuing the chat, now ask the model to convert the data into a chart. The code_execution tool is able to generate code to draw charts, execute that code and return the image. You can see the executed code in the executable_code part of the response.

Combining results from Google Search with tools like live plotting can enable very powerful use cases that require very little code to run.

config_with_code = types.GenerateContentConfig(
    tools=[types.Tool(code_execution=types.ToolCodeExecution())],
    temperature=0.0,
)

response = chat.send_message(
    message="Now plot this as a seaborn chart. Break out the medals too.",
    config=config_with_code,
)

show_response(response)

Okay, I can help you visualize this data using a Seaborn chart. I’ll use a stacked bar chart to represent the medal distribution for each country.

{'executable_code': {'code': 'import pandas as pd\nimport seaborn as sns\nimport matplotlib.pyplot as plt\n\n# Data from the previous response\ndata = {\n \'Country\': [\'United States of America\', \'People\\\'s Republic of China\', \'Japan\', \'Australia\', \'France\', \'Netherlands\', \'Great Britain\', \'Republic of Korea\', \'Italy\', \'Germany\'],\n \'Gold\': [40, 40, 20, 18, 16, 15, 14, 13, 12, 12],\n \'Silver\': [44, 27, 12, 19, 26, 7, 22, 9, 13, 13],\n \'Bronze\': [42, 24, 13, 16, 22, 12, 29, 10, 15, 8]\n}\n\ndf = pd.DataFrame(data)\ndf = df.set_index(\'Country\')\n\n# Plotting the stacked bar chart\nplt.figure(figsize=(12, 8)) # Adjust figure size for better readability\nsns.set_palette("viridis") # Choose a color palette\ndf.plot(kind=\'bar\', stacked=True)\n\nplt.title(\'2024 Olympics Medal Tally (Top 10 Countries)\')\nplt.xlabel(\'Country\')\nplt.ylabel(\'Number of Medals\')\nplt.xticks(rotation=45, ha=\'right\') # Rotate x-axis labels for readability\nplt.legend(title=\'Medal Type\')\nplt.tight_layout() # Adjust layout to prevent labels from overlapping\nplt.show()\n', 'language': 'PYTHON'}}

The stacked bar chart visually represents the medal distribution for the top 10 countries at the 2024 Olympics. Each country has a bar, and the bar is divided into segments representing the number of Gold, Silver, and Bronze medals won. This allows for a quick comparison of the total medals and the composition of medals for each country.
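If you want to inspect or re-run the generated code yourself, it can be pulled out of the response parts. The sketch below works on plain dicts shaped like the `to_json_dict()` output printed above. The helper name `extract_code` and the sample parts are hypothetical, and it assumes each code part carries an `executable_code` entry with a `code` string, as in that output.

```python
def extract_code(parts):
    """Collect code strings from part dicts that contain an 'executable_code' entry."""
    snippets = []
    for part in parts:
        executable = part.get("executable_code") or {}
        code = executable.get("code")
        if code:
            snippets.append(code)
    return snippets


# Hypothetical parts mimicking a text part, a code part, and a closing text part.
sample_parts = [
    {"text": "Okay, I can help you visualize this data."},
    {"executable_code": {"code": "print(1 + 1)\n", "language": "PYTHON"}},
    {"text": "The chart shows the medal distribution."},
]

snippets = extract_code(sample_parts)
print(snippets[0])
```

In the notebook, the input would presumably be built as `[p.to_json_dict() for p in response.candidates[0].content.parts]`.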

Further reading

When using search grounding, there are some specific requirements that you must follow, including when and how to show search suggestions, and how to use the grounding links. Be sure to read and follow the details in the search grounding capability guide and the search suggestions guide.

Also check out some more compelling examples of using search grounding with the Live API in the cookbook, like this example that uses Google Maps to plot Search results on a map in an audio conversation, or this example that builds a comprehensive research report.

- Mark McD